Turning conversation into collective memory

PURPOSE

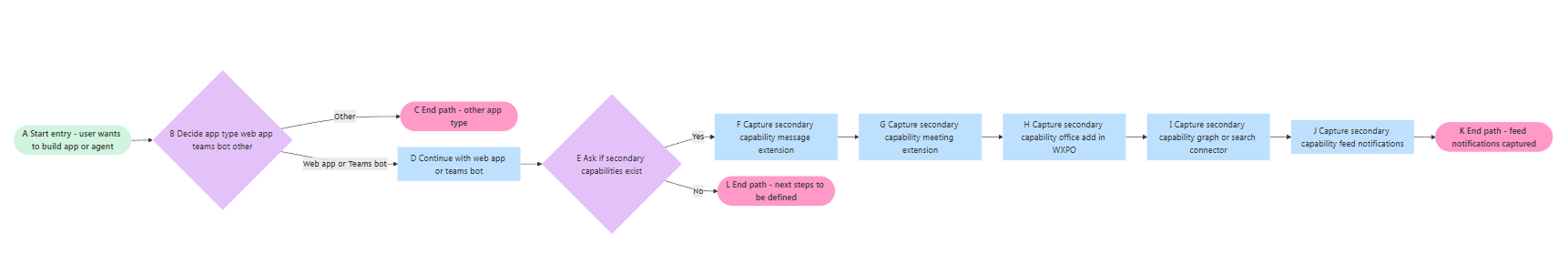

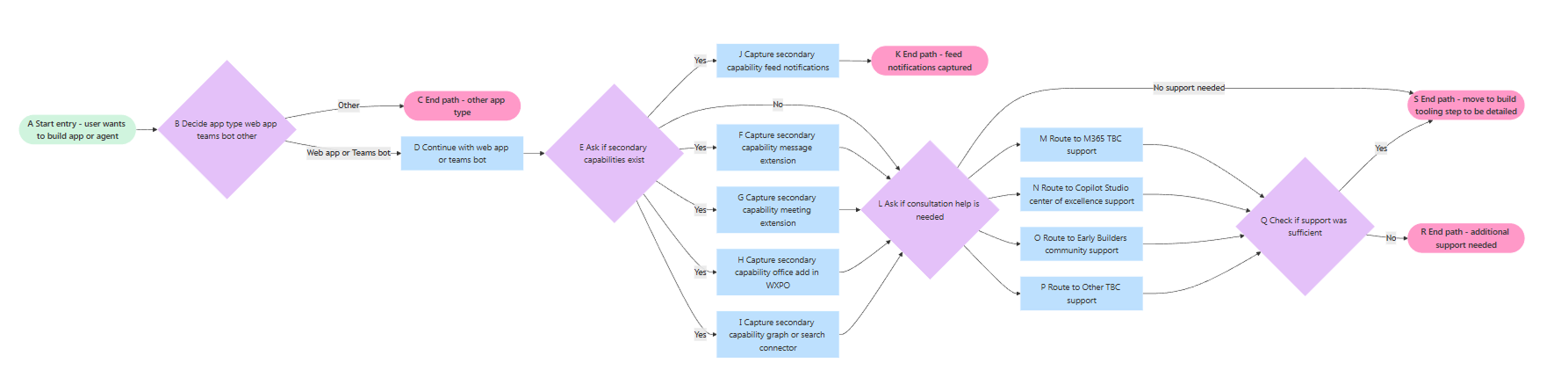

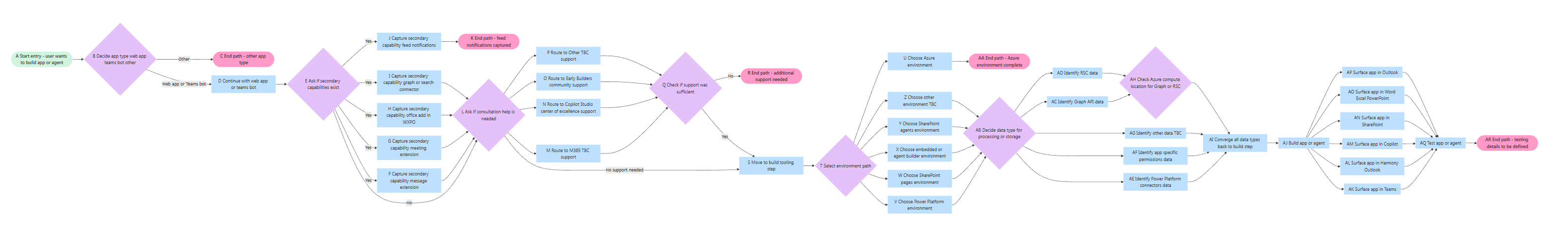

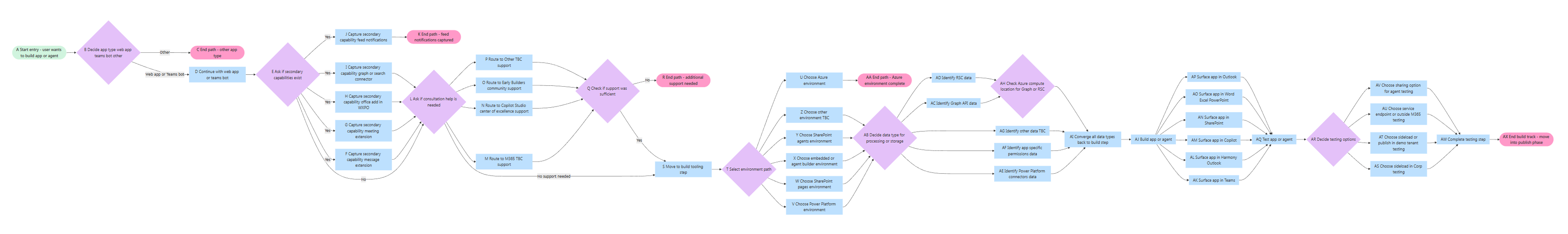

Design an AI meeting assistant that listens to live discussions, translates them into structured flow diagrams, and exports actionable tasks, turning otherwise transient conversations into persistent, shareable insight.

MY ROLE

As UX Strategy and Interaction Design Lead, I defined the product concept, agent behavior, and human-AI interaction model. My work focused on orchestrating how multiple probabilistic systems (transcription, semantic parsing, and visualization) collaborated to preserve human intention without overwhelming users with automation.

This included:

Defining the agent's behavior and how it translates conversation into diagrams.

Establishing feedback and confirmation loops to ensure the model’s confidence thresholds aligned with user trust.

Designing for traceability and transparency, ensuring every AI decision was visible and reversible.

TEAM

1 designer (myself), 5 AI developers, 1 technical architect, 2 operations SMEs, and 1 engagement sponsor.

Timeline: 8-week proof-of-concept phase.

OUTCOME

A working prototype demonstrating real-time speech-to-diagram translation with editable exports, validated through live pilot sessions and later adopted as a reference model for other enterprise workflow agents.

ROLE

UX Lead

CLIENT

Global Fortune 50 Technology Company

DATE

2025